EDIT: Updated for EHC 4.1.2

Architecting large-scale cloud solutions with VMware products means dealing with a number of maximums and limits on how far the different components can scale. People tend to look only at the vSphere limits, but a cloud also consists of several other systems with different kinds of limits to consider. In addition to vSphere, there are limits in NSX, vRO, vRA, vROps and the underlying storage. Even some of the management packs for vROps have limitations that can affect large-scale clouds. Taking everything into account requires quite a lot of tech manual surfing to get all the limitations together. Let’s inspect a maxed-out EHC 4.1.2 configuration and see where the limitations are.

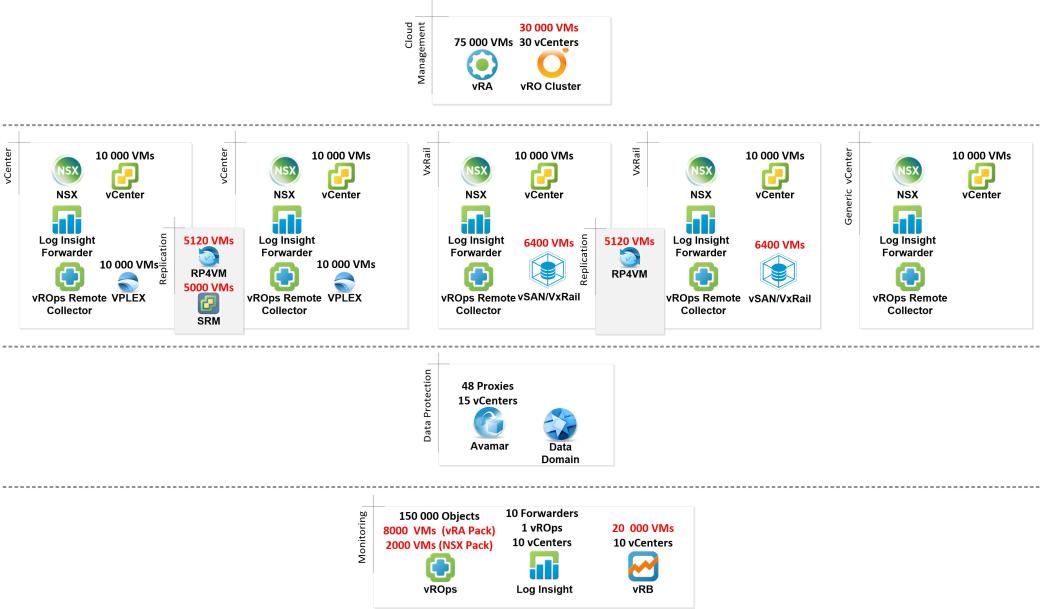

The design above contains pretty much everything you can throw at a VMware-based cloud. It is based on EHC, so some limitations come from internal design choices, but almost all of them are relevant to any VMware cloud.

Start from the top. vRA 7.3 can handle 75 000 VMs. Enough for you? Things are not that rosy, I’m afraid. Yes, you can put 75 000 VMs under vRA management and it will work. It’s the underlying infrastructure that is going to give you some grey hair. Even vRealize Orchestrator cannot support 75 000 VMs. Instead, it can handle either 35 000 VMs in a standalone install, or 30 000 VMs in cluster mode with 2 nodes (15 000 VMs per node). Cluster mode is the standard with EHC, so for our design, 30 000 VMs is the limit. This limit only applies if your VMs are under vRO’s management, for example if they use EHC Backup-as-a-Service. You could of course have VMs outside of vRO, so in theory you could still reach the maximum VM count. An additional vRO server is an option, but EHC uses only one instance for its orchestration needs. Anything beyond that is outside the scope of EHC.
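The vRO ceiling is simple arithmetic, but it helps to see it as the minimum of two numbers. The sketch below is a hypothetical helper (`VRO_MAX_VMS` and `vro_managed_ceiling` are illustrative names, not part of any VMware API); it hard-codes the figures quoted above and only models the two deployment modes discussed here.

```python
# Figures quoted in this post for vRA 7.3 / vRO 7.3 (EHC 4.1.2).
VRA_MAX_VMS = 75_000

# Hypothetical lookup table: VMs a single vRO instance can manage per deployment mode.
VRO_MAX_VMS = {
    "standalone": 35_000,
    "cluster-2-node": 2 * 15_000,  # 15 000 VMs per node in cluster mode
}


def vro_managed_ceiling(mode: str) -> int:
    """Max VMs that can be under vRA *and* managed by the single vRO instance."""
    return min(VRA_MAX_VMS, VRO_MAX_VMS[mode])


print(vro_managed_ceiling("standalone"))      # 35000
print(vro_managed_ceiling("cluster-2-node"))  # 30000 (the EHC standard)
```

Only VMs that vRO actually manages (for example, those using Backup-as-a-Service) count against this ceiling.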

Next, let’s look at the vCenter blocks of our design. A single vCenter can go up to 10 000 powered-on VMs and 15 000 registered VMs. So just slap 5 of those under vRA and you’re good to go, right? Wrong! There are plenty of other limiting factors, such as 2048 powered-on VMs per datastore with VMware HA turned on, 1024 VMs per ESXi host and 64 hosts per cluster, although these usually won’t be a problem. With EHC, you can have a maximum of 4 vCenters with full EHC Services and 6 vCenters outside of EHC Services. You can max out vRA, but you can only have EHC Services for 40 000 VMs (4 vCenters × 10 000 powered-on VMs). When we take vRO into account, the limit drops to 30 000 VMs. You can still have 20 000 VMs outside of these services on other vCenters, no problem.
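To make these checks repeatable, here is a minimal sketch that validates one planned vCenter against the vSphere 6.0 limits and computes the EHC Services ceiling. The `VCenterPlan` fields and function names are hypothetical; the sketch uses 1024 VMs per host, the vSphere 6.0 per-host figure also cited in the vSAN discussion below, and assumes you already know the peak values for your busiest host, cluster and HA datastore.

```python
from dataclasses import dataclass

# vSphere 6.0 limits quoted in this post.
MAX_POWERED_ON_PER_VCENTER = 10_000
MAX_REGISTERED_PER_VCENTER = 15_000
MAX_VMS_PER_HOST = 1024
MAX_HOSTS_PER_CLUSTER = 64
MAX_POWERED_ON_PER_HA_DATASTORE = 2048

# EHC 4.1.2 design limits quoted in this post.
MAX_EHC_SERVICE_VCENTERS = 4
VRO_CLUSTER_MAX_VMS = 30_000


@dataclass
class VCenterPlan:
    """Hypothetical summary of one planned vCenter (peak values)."""
    powered_on: int
    registered: int
    largest_cluster_hosts: int
    busiest_host_vms: int
    busiest_ha_datastore_powered_on: int


def exceeded_limits(plan: VCenterPlan) -> list[str]:
    """Return the names of the vSphere limits this plan exceeds."""
    checks = [
        ("powered-on VMs per vCenter", plan.powered_on, MAX_POWERED_ON_PER_VCENTER),
        ("registered VMs per vCenter", plan.registered, MAX_REGISTERED_PER_VCENTER),
        ("hosts per cluster", plan.largest_cluster_hosts, MAX_HOSTS_PER_CLUSTER),
        ("VMs per host", plan.busiest_host_vms, MAX_VMS_PER_HOST),
        ("powered-on VMs per HA datastore",
         plan.busiest_ha_datastore_powered_on, MAX_POWERED_ON_PER_HA_DATASTORE),
    ]
    return [name for name, value, limit in checks if value > limit]


def ehc_services_ceiling(vcenters: int) -> int:
    """VMs with full EHC Services: at most 4 vCenters, then capped by vRO."""
    usable = min(vcenters, MAX_EHC_SERVICE_VCENTERS)
    return min(usable * MAX_POWERED_ON_PER_VCENTER, VRO_CLUSTER_MAX_VMS)


plan = VCenterPlan(10_000, 15_000, 64, 300, 2500)
print(exceeded_limits(plan))    # ['powered-on VMs per HA datastore']
print(ehc_services_ceiling(4))  # 30000
```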

Inside the vCenter block there are other components besides vCenter itself. NSX follows the vSphere 6 limits, so it doesn’t cause any issues. NSX Manager is mapped 1:1 with vCenter, so officially the single-vCenter limits apply. Since you can add multiple vCenters to vRA, NSX does not lower the overall limit. If your design consists of thousands of VMs, contact VMware support for accurate NSX scalability details. In addition to NSX, there are two collectors for monitoring: the Log Insight Forwarder and the vROps Remote Collector. Both have some limitations, but they don’t affect the 10 000 VM limit of the block.

As always, storage is a big part of infrastructure design. Depending on your underlying array and replication method, you might not reach the full 10 000 VMs per vCenter. For example, vSAN can only have one datastore per cluster. Combined with the HA limit of 2048 powered-on VMs per datastore mentioned above, this capped older vSAN versions at 2048 powered-on VMs per cluster. This limit no longer applies to vSAN 6.x: the maximum for a vSAN cluster is now 6400 VMs, and all of them can be powered on. However, vSAN-based solutions can have only 200 VMs per host, compared with 1024 on a normal cluster. If you use a vSAN-based appliance such as Dell EMC VxRail, the vCenter limit drops to 6400 VMs, since you can only have one cluster and one datastore.
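Which vSAN limit bites first depends on the host count. The hypothetical helper below takes the smaller of the per-host total and the per-cluster cap, using the figures quoted in this post; for a single-cluster VxRail system the result is also the vCenter ceiling. It deliberately ignores the vCenter-wide limits and, for non-vSAN clusters, the 2048 powered-on VMs per HA datastore limit (which you can work around by spreading VMs over several datastores).

```python
# Per-cluster limits quoted in this post (vSphere 6.0 / vSAN 6.x).
MAX_HOSTS_PER_CLUSTER = 64
VSAN_MAX_VMS_PER_HOST = 200
VSAN_MAX_VMS_PER_CLUSTER = 6400
VSPHERE_MAX_VMS_PER_HOST = 1024
VSPHERE_MAX_VMS_PER_CLUSTER = 8000


def cluster_vm_ceiling(hosts: int, vsan: bool) -> int:
    """Max VMs per cluster from per-host and per-cluster limits only.

    Ignores vCenter-wide limits and, for non-vSAN clusters, the
    2048 powered-on VMs per HA datastore limit.
    """
    if not 1 <= hosts <= MAX_HOSTS_PER_CLUSTER:
        raise ValueError(f"a cluster holds 1 to {MAX_HOSTS_PER_CLUSTER} hosts")
    if vsan:
        return min(hosts * VSAN_MAX_VMS_PER_HOST, VSAN_MAX_VMS_PER_CLUSTER)
    return min(hosts * VSPHERE_MAX_VMS_PER_HOST, VSPHERE_MAX_VMS_PER_CLUSTER)


print(cluster_vm_ceiling(16, vsan=True))   # 3200: per-host limit binds
print(cluster_vm_ceiling(64, vsan=True))   # 6400: per-cluster limit binds
print(cluster_vm_ceiling(16, vsan=False))  # 8000
```

With vSAN, the per-cluster cap only starts to bind at 32 hosts (32 × 200 = 6400).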

You most likely want to protect your VMs across sites. EHC offers two methods for this: Continuous Availability (aka VPLEX/vMSC) and Disaster Recovery (aka RP4VM). The first option, EHC CA, doesn’t lower your vCenter maximum, because VPLEX follows the vCenter limits the same way NSX does. EHC supports 4 vCenters with VPLEX, which brings the total of CA-protected VMs to 40 000. Again, vRO narrows this down to 30 000 VMs, and yes, you can have VMs outside of VPLEX protection in a separate cluster and separate vCenters. You could have 4 vCenters with 30 000 VPLEX-protected VMs in total, and on top of that 20 000 VMs outside of EHC.

For EHC DR, the go-to option is RecoverPoint for VMs. RP4VM does not use VMware SRM, and it has its own limits. The maximum for a vCenter pair is 5120 VMs with RP4VM 5.0. These limits will grow with the upcoming RP4VM 5.1 release later this year. EHC supports two vCenter pairs with RP4VM, so the total number of protected VMs is 10240. You can have both replicated and non-replicated VMs in the same cluster, so the overall limit is not affected beyond vRO. We also support physical RecoverPoint appliances with VMware SRM. SRM can support up to 5000 VMs, and you can use SRM in only 1 vCenter pair. You can mix non-replicated clusters with replicated ones, so the overall limit can still be high. Combining RP4VM and SRM, you could have up to 10120 protected VMs between 2 vCenters (5120 with RP4VM plus 5000 with SRM) and 5120 protected VMs between 2 other vCenters, for a total of 15240 DR-protected VMs in the system.
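The DR totals above add up per vCenter pair. This hypothetical helper reproduces them from the quoted limits, assuming (as in the scenario described above) that the single SRM pair shares its vCenters with one of the RP4VM pairs, so the two products' VM counts simply add.

```python
# Replication limits quoted in this post for EHC 4.1.2.
RP4VM_MAX_VMS_PER_PAIR = 5120
RP4VM_MAX_PAIRS = 2
SRM_MAX_VMS = 5000
SRM_MAX_PAIRS = 1


def dr_protected_ceiling(rp4vm_pairs: int, srm_pairs: int) -> int:
    """Total DR-protected VMs; the SRM pair may share vCenters with an RP4VM pair."""
    if not 0 <= rp4vm_pairs <= RP4VM_MAX_PAIRS:
        raise ValueError("EHC supports at most 2 RP4VM vCenter pairs")
    if not 0 <= srm_pairs <= SRM_MAX_PAIRS:
        raise ValueError("EHC supports SRM in at most 1 vCenter pair")
    return rp4vm_pairs * RP4VM_MAX_VMS_PER_PAIR + srm_pairs * SRM_MAX_VMS


print(dr_protected_ceiling(2, 0))  # 10240 (RP4VM only)
print(dr_protected_ceiling(2, 1))  # 15240 (RP4VM plus SRM)
```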

In addition to replication, backup is crucial as well. Backup design can have interesting side effects. Avamar doesn’t have a fixed VM limit, since ingesting backup data depends less on VM count than on the data change rate. The backup system limit has to be calculated from the backup window, the number of backup proxies and the data change rate. You can have up to 48 proxies associated with an Avamar grid. Each proxy can back up or restore 8 VMs simultaneously, so the total is 384 VMs. The per-proxy value is not fixed, but changing it is not recommended. So at any given moment, you can back up 384 VMs. If your backup window is 8 hours and one VM takes 10 minutes to back up, your maximum is 18432 VMs inside the backup window (48 ten-minute rounds of 384 VMs, assuming all 384 VMs start and finish within the same 10 minutes). There are a lot of assumptions in this calculation, so be careful when designing the backup infrastructure. You can obviously have many Avamar grids if needed.
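The backup window calculation can be captured in a few lines. This is a rough sketch under the same simplifying assumptions as the text: every VM takes the same time, and all concurrent slots start and finish together. The function name is hypothetical, and it does not model data change rate, which is what really drives Avamar sizing.

```python
MAX_PROXIES_PER_GRID = 48
DEFAULT_STREAMS_PER_PROXY = 8  # changeable, but not recommended


def vms_per_backup_window(window_minutes: int, minutes_per_vm: int,
                          proxies: int = MAX_PROXIES_PER_GRID,
                          streams_per_proxy: int = DEFAULT_STREAMS_PER_PROXY) -> int:
    """Rough VM count one Avamar grid can back up in a window.

    Assumes every VM takes exactly `minutes_per_vm` and all slots start and
    finish together, which real change rates will not honour.
    """
    if not 1 <= proxies <= MAX_PROXIES_PER_GRID:
        raise ValueError(f"an Avamar grid supports 1 to {MAX_PROXIES_PER_GRID} proxies")
    concurrent = proxies * streams_per_proxy   # 48 * 8 = 384 VMs at once
    rounds = window_minutes // minutes_per_vm  # complete backup rounds that fit
    return concurrent * rounds


print(vms_per_backup_window(8 * 60, 10))  # 18432
```

Halving the proxy count or lengthening the per-VM backup time scales the result down linearly, which is why change rate measurements matter more than the headline numbers.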

If you thought that was complex, wait until we get to the monitoring block. You wouldn’t think that monitoring is a limiting factor, but you would be wrong. There are some interesting caveats that should at least be known and taken into consideration. Obviously the platform limits are what really count, but monitoring is a huge part of a working cloud environment. Log Insight doesn’t really have VM limitations; it only cares about incoming events (Events Per Second, EPS), and the Log Insight calculator helps with the sizing. You can connect up to 10 vCenters, 10 Forwarders, 1 vROps and 1 AD, among other things, to a single Log Insight instance. Our design uses Log Insight Forwarders to gather data from the vCenters and ship it to a main cluster.

vROps is another matter. Whereas the vROps cluster can ingest huge numbers of VMs (150 000 objects with the maximum configuration), the Management Packs can become a bottleneck. The vRealize Automation Management Pack can handle 8000 VMs with vRA 7.x and 1000 VMs with vRA 6.2. That’s a lot less than the 75 000 VMs vRA can support, and it would be nice to have all of these VMs monitored, right? The NSX Management Pack also has a limit of just 2000 VMs and 300 Edges, but its release notes state that this is the tested limit and that it will scale beyond it. This is probably true for the vRA Management Pack as well, but it is not stated in the docs.

Finally, vRealize Business for Cloud adds another limit to the mix. It can handle up to 20 000 VMs across 10 vCenters. Again, this limits the overall number of VMs in the system if all of them need to be monitored. Unfortunately, there is no way to exclude some of the VMs in vRA; all of them are monitored by vRB. You can, however, leave some vCenters outside of vRB monitoring. Combining this limit with the others in this post, the total limit comes down to 20 000 VMs, and even lower if you want them to be monitored by vROps. There are ways to go beyond the limits, either by not monitoring all of the vCenters or by adding more VMs than is supported and taking a risk. The latter is not recommended, of course.
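The overall ceiling is simply the lowest limit among the components your VMs depend on. The sketch below is a hypothetical illustration using VM ceilings quoted in this post; the dictionary keys are labels chosen for readability, not product identifiers. The NSX Management Pack figure is left out because it is described as a tested limit that will scale beyond 2000 VMs, and the vRA Management Pack figure may turn out to be soft as well.

```python
# VM ceilings quoted in this post for a maxed-out EHC 4.1.2 design.
PLATFORM_LIMITS = {
    "vRA 7.3": 75_000,
    "EHC Services (4 vCenters)": 40_000,
    "vRO 7.3 (2-node cluster)": 30_000,
    "vRB for Cloud 7.3": 20_000,
}
# Documented limit of the vRA Management Pack 2.2 with vRA 7.x.
VROPS_LIMITS = {"vRA Management Pack 2.2": 8_000}


def binding_limit(limits: dict[str, int]) -> tuple[str, int]:
    """Return the component with the lowest VM ceiling."""
    name = min(limits, key=limits.__getitem__)
    return name, limits[name]


print(binding_limit(PLATFORM_LIMITS))
# ('vRB for Cloud 7.3', 20000)
print(binding_limit({**PLATFORM_LIMITS, **VROPS_LIMITS}))
# ('vRA Management Pack 2.2', 8000)
```

Dropping a component from the dictionary (for example, leaving some vCenters outside vRB monitoring) is the code equivalent of the workarounds described above.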

As you can see, the limitations are all around us. You are golden up to 2000 VMs, but beyond that you really need to think about what you need to accomplish and do some serious sizing. Well, maybe a bit before that...

| EHC 4.1.2 component | VM Limitation | vCenter Limitation | Other | Source |
|---|---|---|---|---|
| vCenter 6.0 U3b | 10 000 VMs (Powered On), 15 000 VMs (Registered), 8000 VMs per Cluster, 2048 Powered On VMs on a single Datastore with HA | 64 ESXi hosts per Cluster, 500 ESXi hosts per DC, 1000 ESXi hosts | | vSphere 6 Configuration Maximums |
| vRA 7.3 | 75 000 VMs | 1 vRO instance per tenant (XaaS limitation), 20 vSphere Endpoints | EHC: 1 tenant allowed with EHC Services | vRealize Automation Reference Architecture |
| vRO 7.3 | 35 000 VMs (standalone), 15 000 VMs per vRO Node in Cluster Mode | 20 vCenters | Single SSO domain | vSphere 6 Configuration Maximums |
| NSX 6.2.7 | vCenter limits | 1 vCenter per 1 NSX Manager | | vSphere 6 Configuration Maximums |
| vROps 6.5 | 150 000 Objects (with fully loaded vROps, 16 Large nodes) | 50 vCenter Adapter instances, 50 Remote Collectors | | vROps 6.5 Sizing Guidelines |
| Log Insight 4.3 | No VM limitations, only Events Per Second matter | 10 vCenters | 10 Forwarders, 1 AD, 2 DNS Servers, 1 vROps | Log Insight Administration Guide, Log Insight Calculator |
| vSAN 6.2 | 200 VMs per Host, 6400 VMs per Cluster, 6400 Powered On VMs | 64 Hosts per Cluster | 1 Datastore per Cluster, 1 Cluster per VxRail system | vSphere 6 Configuration Maximums, vSAN Configuration Limits |
| vRB for Cloud 7.3 | 20 000 VMs | 10 vCenters | | vRealize Business for Cloud Scalability |
| Avamar 7.4 | No fixed limit; depends on data change rate, backup window and number of Proxies | 15 vCenters (can go higher) | Maximum of 48 Proxies, 8 concurrent backups per Proxy | Avamar 7.4 for VMware User Guide, EMC KB 411536 |
| VPLEX / vMSC 6.0 P1 | 10000 Powered on VMs, 15000 Registered VMs | Follows vCenter limits | | vSphere 6 Configuration Maximums |
| RecoverPoint 4.4 SP1 P1 / SRM 6.1.2 | 5000 VMs | 1 vCenter pair allowed in EHC | Can recover max 2000 VMs simultaneously | VMware KB 2105500 |
| RecoverPoint for VMs 5.0.1.2 | 1024 individually protected VMs, 5120 VMs per vCenter Pair, 10240 VMs across EHC | 2 vCenter Pairs in EHC, 32 ESXi hosts per cluster | Recommended max 512 VMs per vSphere cluster with 4 vRPA clusters; if EHC Auto Pod is protected with RP4VM, 896 CGs left for Tenant workloads | RP4VM Scale and Performance Guide |
| vRA Mgmt Pack 2.2 | 8000 VMs (with vRA 7 / EHC 4.1.x) | | Mgmt pack v.2.0+ | vRA Mgmt Pack Release Notes |
| NSX Mgmt Pack 3.5 | 2000 VMs, 300 Edges (will scale beyond) | | Mgmt pack v.3.5+ | NSX Mgmt Pack Release Notes |

Nice work Joni.. demonstrates that building your own cloud infrastructure is complex and hard work, and requires one to pay attention to the details across a large number of products, with forethought on the use cases that will be leveraged in a single site as well as across a multisite deployment
